Cytomics in Drug Discovery and Development

![[construction icon]](https://www.vanosta.be/images/worker.gif)

Index

- Introduction

- Problems of drug discovery and development - problems at several levels

- Improving drug discovery and development - attempts at several levels

- Problems with disease models - models and the intrahuman ecosystem

- Less questions, but better answers - decomplexification of questions, improving answers

- A cytome project

- A proposal for the exploration of the human cytome - only high level concept

- A framework for the exploration of the human cytome

Introduction

This webpage is meant as a humble contribution to the discussion about improving drug discovery and development, but it is still under construction. It did not go through the traditional peer review process of scientific publications and neither does it pretend to be a critical appraisal of medical evidence, so read it with care and with a critical mind. Feel free to comment and to criticise.

Why would drug discovery and development benefit from cytomics and what are the fundamental problems we face in drug discovery and development ? Cytomics and a coordinated research effort organised in a human cytome project aims at creating a better understanding of the cellular diversity of biological systems and reducing the great divide between present day reductionist models and clinical reality. Cytomics allows us to close the great divide between molecular research and the complexity of the intrahuman ecosystem. There is no clinical evidence to be found in a testtube, so the odds are against basic and applied research in our (pharmaceutical) laboratories and (pre-)clinical pipelines. Understanding the (heterogeneous) cellular level of biological organisation and complexity is (almost) within reach of present day science, which makes cytomics as a science and a human cytome project ambitious but achievable. A human cytome project is all about creating a solid translational science, not from bench to bedside, but from molecule to man. Even more than translational science, it is about transformational science as it transforms the molecular microcosm into the macrocosm of the intrahuman ecosystem and its physiology.

Although the present business model of pharmaceutical discovery and development is capable of coping with massive and late stage failures during the development life cycle of a new drug, it is not the way we should continue to work as the challenges ahead are even bigger than the ones we already faced in the past. The current business model is capable to deliver enough drugs to sustain itself, given the disease mechanisms drug discovery and development deal with, do not surpass a modest level of biological complexity. The present business model simply takes into account the high risk and failure rate of present day science. The scientific engine, from basic to applied research, of the overall process is not yet capable to predictably deliver new drugs which can stand the challenges of biological complexity. At any given moment in time the actual performance of the scientific engine of the process and its scientists has to be taken more or less for granted, and only cost (labor, headcount, process engineering, economy of scale, mergers and acquisitions) and income (price, health insurance, government pricing policies) are available for marginal improvements in process performance (efficiency) and productivity (effectiveness). Business engineering is somewhat easier than engineering the framework of science. We do what we can and what is possible, but not necessarily what is required. We look for solutions where we can find them, but not necessarily where we have to go.

We have to destroy too much capital and human effort to sustain the pipeline, which nowadays resembles a small tube in most companies (to be honest the past was not better either). We compensate for massive scientific failure rates by business model egineering. We have to build a business model on almost catastrofic failure rates compensated by high cost per product, which is only possible in medicine at this rate. But no matter how efficient we engineer our business processes, we cannot hide from the fact that we are running a pipeline process which has too much risk hidden underneath to feel comfortable with as the basis for delivering new treatments for the diseases of our society. Although the pharmaceutical process matrix is in itself consistently organised, it is is slightly out of touch with the complexity of clinical reality. This becomes visible at the end of the pipeline, when truth is finaly forced upon us and we can no longer hide from clinical reality, which extends beyond the frontiers of science. At the intermediate stages the confrontation with reality is only partial and resembles a democracy where the (clinical) opposition is (to be) excluded from voting. A safety test may prove that a candidate drug is safe, but leaves one blind on its efficacy. We cannot deal with all the biological complexity of the intrahuman ecosystem and so we have to withdraw into simplified representations of reality which are too simple as we have to acknowledge at the end of the pipeline in the vast majority of drugs being developed. Expansion of the frontiers of our knowledge is an interesting activity but the process of knowledge expansion in itself is an ineffective and inefficient process. At the intermediary steps we are capable to declare victory because we can set the rules less stringent than those which nature itself pushes through in a real world clinical situation at the end of the game. Life in the lab is therefore easier than life in the clinic, where you have to look the patient in the eye and fight diseases in reality and not in a test tube. Changing the overall process however is a Gargantuan endeavour as it is all about the core scientific engine in the first place, which is almost inert when it comes to paradigm changes.

We are doing our science right, but we are not doing the right science. The success ratio of science, when starting from basic research down to its successful application in the clinic is less than 1 percent. But as scientists have no clue which part of the 100 percent effort will succeed, society has no option but to waste 99 percent of the effort in order to succeed in 1 percent of all research (from basic research to successful application). The main problem is that our basic science is basic in dealing with biological complexity. We look at the intrahuman ecosystem through a keyhole and remain blind for most of its complexity. Although we increasingly automate the scientific process, the process in itself remains largely the same and the effectiveness does not change in the same order of magnitude as its automation which mainly drives the efficiency. We may proceed faster through some stages of the pipeline with our machines, but we still do not reach the goal in most cases. The amount of data being produced increases dramatically, but a pile of numbers does not equal a pile of true understanding of the perceived image of reality which our machines unfold to us providing the view through which we observe and perceive the disease process but still not the reality itself we would need to know and understand. Capacity does not equal performance in terms of results, activities based on the wrong assumptions do not help us in the end. Failing intermediary discovery and development stages leave us with massive failure at the final gate. We postpone the true confrontation with the complexity of nature until the end of the entire process and thereby fail to remedy the intermediary steps (pipeline science is what it is, just live with it). The moment of truth only becomes apparent during the final stages of drug development, buth then it is is already too late. We are good at scientific engineering within the boundaries of our models of biological reality, but rather inefficient in expanding the boundaries of true understanding of the dynamics of biological processes (i.e. the dynamics of biology or multilevel physiology, not just its molecular structure). Present day science, as its predecessors, is far from perfect or up to its clinical challenge, but it is all we have, so for any moment in time we just have to live with it and build our business on top of it.

The dramatic failure rate of science in the face of clinical reality is just one of the parameters in the equation of a business model. Business succeeds even when science fails massively. Pharmaceutical companies just take into account the massive failure rate of (basic) science in their business model. No matter the productivity of science, running a very profitable pharmaceutical business was, is and will always always be feasible, by compensating for the deficits of science by careful management and business engineering (which is somewhat easier than changing the paradigm of science). From the early days of industrial drug discovery and development, management techniques have been conceived and used to create a profitable business, regardless of the shortcomings of science to produce drugs which succeed in the face of clinical reality. Assembling a profitable pipeline can be done either within a traditional monolithic structure (big pharma) or a cluster of risk sharing companies each a part of the overall pipeline. These two business models establish a different risk exposure, but the overall scientific process (the actual engine which drives the pipeline) remains the same. Pharmaceutical management manages risks, which are near catastrophic in drug discovery and development. The science of conceiving the framework and managing a pharmaceutical company is even of a higher order than the scientific engine itself, as it is capable of generating profitability and bring new drugs to the people on top of a scientific process which has less chances to succeed than throwing a dice.

The question is of course, which direction we are capable to take in order to move forward; even more business engineering and automation (increase efficiency) or changing the paradigm and practice of basic and applied science (improve effectiveness), or both ? Although we are becoming increasingly efficient, we do not achieve more effectiveness in the same order of magnitude. Is academia and the industry capable to innovate its core activities ? Is changing such a complex process possible anyhow, while at the same time keeping the business in the air ? What is the value of getting more answers on the wrong questions ? What is the value of our work if the review by nature itself declines the results of our scientific effort ? Is there any hope of an improvement in the productivity of the scientific process itself ?

Do we just have to live with the as is situation of basic and applied science and make the best of it at any given moment in time ? Are we capable to improve the scientific process of knowledge expansion itself or do we continue crawling slowly at the borders of present day scientific boudaries instead of engineering the scientific process itself towards more productivity (truly succeed more often when facing the challenge of nature itself). Scientific practice is always done within the boundaries of the paradigms of the day but not along the lines demanded by nature itself. No matter how far we are today, there is something wrong with the productivity of the scientific method iself in the face of nature. The weakness is not the practice, but the process itself which is flawed towards its performance to find the right answers on complex questions. The process delivers what it is designed for by our science, but not what is required to deal with nature itself in the real clinical world in more than 90 percent of what we (try to) deliver.

As such this article is dedicated to all the patients hoping and waiting for new treatments of unmet medical needs and the improvement of existing therapies. It is also dedicated to all the scientists working in basic and applied research, working day and night to deliver these new drugs and treatments. It deals with process (technology, biology) and model deficits in drug discovery and development at various points throughout the pipeline. Pipeline analysis and engineering is a delicate process as it is required to change the bricks of the house, without destroying it.

The breakthroughs in basic research have not resulted in the creation of many new therapies for patients, which lead to the 'pipeline problem'.

Improving drug discovery and development is not a simple endeavour, as we have seen in recent years. Although this

article is critical about the (evolution of the) overall drug discovery and development process it also honours

the individual contributions of scientists who have discovered and developed drugs which save

and improve the lives of many people. The purpose of critical discussion is to

advance the understanding of the field. While many are spurred to criticize from competitive instincts,

"a discussion which you win but which fails to help ... clarify ... should be regarded as a sheer

loss." (Popper). Let us look at the present with the future in our mind. Although this article may seem

wide ranging and to some shows lack of focus, it is meant to be comprehensive and also show the lack of

focus of many solutions which attempt to solve the pipeline problem by revolving around the core problem.

The problems with drug discovery and development are already leading to international initiatives.

See also

Innovation and Stagnation: Challenge and Opportunity on the Critical Path to New Medical Products - USA,

Innovative Medicines Initiative (IMI) - Europe EU,

New Safe Medicines Faster Project - Europe EU

and the

Priority Medicines for Europe and the World Project "A Public Health Approach to Innovation" - WHO.

Drugs have both a humanitarian value and a financial value. Pharmaceutical research and development

contribute a major part of the research necessary to move new science from the laboratory to the bedside.

Through academic and industry efforts, many new drugs and devices have been developed and marketed,

which save and improve the lives of many people.

However, the costs to bring new drugs to the market have risen sharply in recent years and the output of drug development

and as such the Return On Investment (R.O.I.) has not kept pace. Drug development has always more or less

resembled more of a lottery than a controlled process, due to the lack of basic understanding of biological

complexity in the intrahuman ecosystem. In relation to the effort (cost involved), fewer drugs and biologics are making it

from Phase I clinical trials to the marketplace, which has dramatically increased the cost of drug development

(Crawford L.M., 2004). Late stage failure in

Phase III clinical trials and NDA disapproval has risen from 20% up to 50% (Crawford L.M., 2004).

From an economical perspective, the goal of improvements in drug discovery and development is to increase the

Net Present Value (NPV also

"fair value" or "time value") of pipeline molecules and to decrease the costs associated

with pursuing failed projects.

Basicaly the Net Present Value (NPV) is the worth of a good at the present moment and

for investments the Net Present Value is an important indicator. Only an investment, that offers

you a positive net present value, is considered to be worth to pursue. This has not been the

case in recent years for many drug development projects, as up to 90% fail.

The bottom line form an economical perspective is that in the end

any change in the process or its (scientific) content should improve the

Return on Capital Employed (ROCE).

This article aims at improving the probability of success in drug development (reduce late stage clinical development attrition)

by using better disease models (higher predictive power) in drug discovery an pre-clinical development. This improvement should

lead to bringing better drugs (more effective, less side effects) to the patients, both cheaper and faster.

The challenges which the pharmaceutical industry is facing:

From a business perspective, there are 2 sources of value creation by a more productive discovery processes

("clinical" quality of molecules). Both require a better scinetific engine in the first place:

There are 4 levers for creating value in (pre-)clinical drug development (process improvement):

What should we achieve for the overall drug development process in order to restore its productivity (principles):

What should be the deliverables (targets, quantitative), however ambitious they may seem given the present state of science:

The pharmaceutical industry has a history of initial innovative breakthroughs (first-in-class),

followed by slower, stepwise improvements of such initial successes (best-in-class).

How can we improve the Probability Of Success (POS) of the

overall drug discovery and development process and as such improve both the quality and

quantity of new drugs, both for innovative as well as stepwise improvements? Why do we need

to learn more about the human cytome to

improve drug discovery and development? How can cytome research help us to discover

and develop better drugs with a higher success rate in clinical development?

What is wrong with the drug discovery and development process as it is now, so its costs are

soaring and its R.O.I. is declining? Why do we only succeed in improving success rates somewhat with

biologicals and no longer with small molecules ?

Everyone

managing the discovery and development of drugs has to ask a few questions

about every new scientific idea or technology which pops up (and they do, all the time).

Every scientific idea or technology to be applied to drug discovery and development

must specify realistic and compatible goals and expectations. When we want to

introduce a new scientific idea into drug discovery and development we must

balance between good science and a credible business plan. We must be critical about the promises made.

Is the claim or argument relevant to the overall subject? ('Subject matter' relevance).

Is the argument or claim relevant to proving or disproving the conclusion at hand? ('Probative' relevance).

Personal note

My personal interest in cytomics, grew out of my own work on High Content Screening, as you can see in:

- Image Analysis gives a clear view in research

- Scalespace or Differential Geometry

- Color Differential Geometry or the Spatial Color Model

- Application of linear scale space and the spatial color model in light microscopy

- Automated Tiled Multi-mode Image Acquisition and Processing Applied to Pharmaceutical Research

- The M5 framework for exploring the cytome

Scope of the article

This article deals

with the analyis of several apects of the drug discovery and development process

and the the weak spots and flaws within this process, which cause the high late stage

attrition in the pipeline. The pharmaceutical industry has passed the threshold

where only slight adaptive changes can restore its productivity and profitability.

Reducing costs is not enough to restore the health of the industry, a paradigm shift is needed,

but this will require a new vision on the fundamentals of the drug creating process and the way true

knowledge and understanding is being built on the foundations of scientific discovery and through

applied research.

However important business processes are, this article in itself is not about

Business Process Improvement or Business Process

Reengineering as this is outside the scope of Research Process Improvement and

Research Content Improvement. The processes surrounding the drug discovery and

development process require attention and optimisation too, but in the end

success in drug discovery and development depends on bringing the right drug to

the right patient. Both the drug discovery and development process and its

scientific content require optimisation beyond their current state.

The

scientific content of the drug discovery and development process, goes beyond business management

principles and is more difficult to optimise than the process itself. It is the

present state of basic (reductionistic) and applied science and technology itself, in relation to the complexity of biological systems,

which still limits our chances for success. Inadequate understanding of basic science for certain diseases and the

identification of targets amenable to manipulation is one of the major causes of failure in drug development.

The endpoint of discovery is understanding a complex biological process, not just a pile of molecular data.

The endpoint of development is therapeutic success in man (a complex biological system), not just a molecular interaction.

The level of understanding at the end of drug discovery

(and preclinical development) should achieve a knowledge level which is capable

to predict success at the end of the pipeline much better than we do now.

No matter what is the origin of the

compound under evaluation, or how it came into being, a good description of its in vivo pharmacological properties

is necessary to assess its drug-like potential.

The sooner NCEs or NBEs evolve in a "rich" or lifelike biological environment

(resembling the situation in a human population) the sooner we capture (un-)wanted phenomena.

There is a time-shift between the implementation of a new

approach (linking genes almost directly to clinical diseases) and finding out

about its impact on commercial success, which makes the feedback loop

inefficient due to its long delay in relation to the quarterly and annual

business cycle. From a business perspective, any process can be sped up and

content can be sacrificed or complexity reduced. In a stage-gate process, the stages

deliver the content for the decisions at the gates, so the stages should be informative and

predictive. Processes and portfolio management can be optimised near perfection. This may be provide sufficient leverage

for a (albeit complex) 'nuts and bolts process' (e.g. automotive industry), but not for

processes in a biomedical context when our understanding of pathogenesis and

pathophysiology is still very patchy and

incomplete. We leave a large potential for improvement untapped. Improving

a development process which still fails for 90% of all developmental drugs,

is not optimised at all. With the current inefficient process we are, in most cases, unable to serve smaller patient populations.

If we ever want to reach the goal of personalized medicine, which is in my opinion is beter understood as

succeeding in unraveling the molecular diversity of clinicaly similar disease manifestations, some

conditions need to be fulfilled:

A lot of research and development will be needed to reach this goal, leaving aside the ethical minefield.

The complexity of

intermediary modulation of gene-disease (un-)coupling was clearly underestimated in recent years.

In the early stages of drug discovery, the data tend to be reasonably black and white.

As you get to more multifactorial information and more complex systems later on in drug discovery and development,

that becomes less true. Managing this complexity in a coherent way is a challenge we must deal with

in order to be successful. So how can we facilitate (and understand) the flow through the pipeline,

without generating empty downstream flow in clinical development? How to plug accelerators into the drug discovery and

(pre-)clinical development pipeline which prove their value onto the end of the pipeline? How

do we create a true Pipeline Flow Facilitation (PFF) process?

The first part of this article shows the problems of the drug discovery and development process.

It shows the present problems of the pharmaceutical industry.

The second part of this article looks for the best

way to improve the drug discovery and preclinical development process as these feed clinical development

with drug candidates which should make it to the right patients.

The third part of this article deals with the problems with

disease models in drug discovery and preclinical development and why they cause so much late stage

attrition later on in clinical development.

Overview of related articles on this website

Personal

interest and background where I provide som information how the idea for

a Human Cytome Project (HCP) has grown over time.

References

have been put together on one page.

Overview of problems and questions

Scientific background about

the Human Cytome Project idea can be found here

The potential impact on the

efficiency of drug discovery and development where I give an analysis

of the reasons for the unacceptable high attrition rates in drug development which

have now reached 9O%. Our preclinical disease models are failing, they look back

instead of forward towards the clinical disease process in man.

Overview of solutions and suggestions

A proposal of how to explore the human cytome where I give an overview of

the deliverables and the scientific methods which are (already) avalable.

How to deal with the analysis of the cytome in order to improve our understanding of

disease processes is being dealth with in another article. The

first part deals with the problems of

analyzing the cytome at the appropriate level of biological organization.

The second part deals with the ways of

exploring and analyzing the cytome at the multiple levels of biological organization.

A concept for a software

framework for exploring the human cytome is a high-level concept for large scale

exploration of space and time in cells and organisms.

Some thoughts on the pitfalls of applied research

The vulnerability of applied research, such as drug discovery and development, is hidden in the basics of scientific reasoning. In traditional Aristotelian logic,

deductive reasoning is inference in which the conclusion is of no

greater generality than the premises, as opposed to abductive and

inductive reasoning, where the

conclusion is of greater generality than the premises. Other theories of logic define deductive

reasoning as inference in which the conclusion is just as certain as the premises, as opposed to

inductive reasoning, where the conclusion can have less certainty than the premises. Scientific research is

to a large extent based on inductive reasoning and as such vulnerable to overenthusiastic generalizations and simplifications.

There is a lot more to say on the philosophy of science and its impact on research, but this is outside the scope of this article.

In addition the discussion about the problems of the drug discovery and development process is full of

red herrings and other

logical fallacies, which

distracts our attention from the real question: does the treatment work in man

(see also Organon from Aristotle).

Ignoratio elenchi

(also known as irrelevant conclusion) is the logical fallacy of

presenting an argument that may in itself be valid, but which proves or supports a

different proposition than the one it is purporting to prove or support (The promise

to the pharmaceutical industry "do this" or "buy that" and you

will deliver more and better drugs to the market). The ignoratio elenchi fallacy is

an argument that may well have relevant premises, but does not have a relevant conclusion.

The Red Herring fallacy is the counterpart of the ignoratio elenchi where the explicit

conclusion is relevant but the premises are not, because they actually support something else.

The complex relation between the input of

the drug discovery and development process (manpower, methodology and technology) and its output (drugs which succeed)

is underestimated, leading to unacceptable late stage attrition rates.

However, there are no simple answer to complex problems, such as how to create a truly productive process, both

effective and efficient.

The truth is forced upon us during the late stages of clinical development, when we fail because of a lack of

predictive power of discovery and preclinical development.

Like most opportunistic enterprises, pharmaceutical companies run the managerial risk of succumbing

to the enthusiastic optimism of a pragmatic fallacy.

Leading scientists and managers have to understand and systematically manage ambiguity in an

increasingly complex environment. There is more ambiguity in clinical reality and economical reality

than in an Eppendorf tube. You have to assess risk and benefits of decisions and anticipate

the impact on drug discovery and development in the longer term, far beyond the short-term quarterly goals.

Those who take responibility for strategic management have to grasp opportunities capable of

generating new opportunities for improving drug discovery and development in a productive way.

You have to scan the scientific, business and regulatory environment and think well ahead to identify things

which may get in the way of meeting objectives - either obstacles or changes in the overall situation.

Managers and scientists have to develop complex strategies which take into account the diverse interests across scientific domains,

economics, rules and regulations. It does not help that scientists prefer the scientific excitement of

reading Nature and Science, while

managers prefer the Wall Street Journal and the

Financial Times. There is a lack of cross-discipline

understanding and colaboration throughout the entire process (not enough silo busters). The true challenge is

to appreciate that the discovery, development, application, and regulation of the target to drugs pipeline

has to be viewed as integral processes with each element having important, sometimes critical,

implications on the other components with decisions weighed accordingly.

Drug discovery and development

Evolution of process and content performance

Figure 1: Evolution of sales for some big pharmaceutical companies. Source: Yahoo finance and other sources |

Figure 2: Evolution of earnings for some big pharmaceutical companies. Source: Yahoo finance and other sources |

Figure 3: Evolution of earnings as ratio of sales for some big pharmaceutical companies. Source: Yahoo finance and other sources |

Figure 4: NYSE Pharma shares of Merck &Co. (NYSE:MRK), Pfizer (PFE), Eli Lilly (LLY), GlaxoSmithkline (GSK) and Bristol-Myers Squibb (BMY). _DJI (Dow Jones Ind.), _IXIC (Nasdaq). Source: Yahoo finance |

The graph in Figure 1 shows the evolution of sales for some big pharmaceutical

companies from 1999 until 2004. Pfizer, Johnson & Johnson and GSK show an increase ahead of the others.

Merck suffered from the problems associated with Vioxx, which illustrates the fragility of commercial

sucess. The graph in Figure 2 shows the evolution of net earnings from 1999 to 2004.

Here there is less difference between the companies, no company is able to outperform the others in a

dramatic way. The graph in Figure 3 shows the evolution of the percentage net earnings to

sales. On average there is a steady decline from 19.5% in 1999 down to 14.0% in 2004.

The graph in Figure 4 shows that in recent years the growth of the

pharmaceutical industry has slowed down. The pharmaceutical industry found themselves in a tight

spot in the beginning of the 21st century. The sector has seen a decrease in financial performance

following a boom period in the 1990s, fueled by a succession of drugs with sales over US$

1 billion per year (blockbusters). As all drug companies improved their stock value, the cause is common

to all of them and not to one company outperforming the other companies. Of all the knowledge required to develop

a new drug, the most important component is a true understanding of the molecular basis of the disease process.

This knowledge is mainly in the public domain and available for all companies, so no company is capable of

outperforming its peers in the long run. Although it may take up to 15 years to develop a new drug,

it may take up to 20-30 years to unravel the mechanism of a disease and this requires an effort on

a global scale, not just of a single pharmaceutical or biotech company. Almost 90% of all science required

to create a drug for a given disease, resides outside the pharmaceutical industry, but 90% of the risk is

hidden within the inefficient process used within the pharmaceutical industry (90% failure in drug development).

The industry faces the final challenge of proving that the ideas about a disease process truly work

in the complex biosystem of man.

The drug discovery and development process suffers from "particularism" and lack of "generalism"

as the success with one drug does not lead to a consistent increase of performance. Working on improving the process as it

exists today within the pharmaceutical and biotech industry is only leveraging 10% of the required knowledge-base.

A failure rate of 90% for 10 or 50 drugs in the development pipeline, is not an example of process improvement,

although the bigger pipeline will lead to a five-fold increase of drugs reaching the market.

How can the pharmaceutical industry

get out of the current situation of spiraling costs and reduced R.O.I?

There is no simple answer to this question, as a solution requires improvements in multiple domains.

The management of research must ensure that the resources are directed to investigations consistent with

the ultimate goal, the development of a successful drug. The management of

research is full of uncertainty and complexity. Research has substantial

elements of creativity and innovation and predicting the outcome of research in

full is therefore very difficult. The costs and risks involved in developing,

testing and bringing new drugs to market continue to grow, pharmaceutical

companies are coming under increased pressure to make the discovery and

development process more manageable and efficient. Today we need both better

processes as well as better science to succeed in the disease jungle or the

pathogen minefield. Success will go to those who can manage the hybrid

activities between science, technology, and the market.

Although innovative and

sound research is a prerequisite, it is ultimately the therapeutic success of the drug

which results in sales and profits. And it is usually proprietary (patented) products which earn

the highest returns, because they produce sustainable competitive advantage

over a substantial period of time (e.g. patent lifecycle). We must keep in mind

that it is the ability to produce proprietary products, not just interesting

science, which leads to a profitable and sustainable pharmaceutical company.

The business process around

the drug discovery and development process itself can be improved as well as

the R&D process management itself (e.g. Business Process Improvement,

process and portfolio management, ).

Reducing R&D costs and shortening product development cycles will certainly

contribute to an increase in profitability. But when the scientific substrate

of the R&D process itself is not optimised too, we leave a huge potential

for treating diseases cost-effectively and generating profit untapped.

Both the process and its content require our attention. The recent gulf of mergers

an acquisitions provides some short-term relief, but when we combine two companies

with each a 90% attrition rate in drug development, we just get a bigger

company with also a 90% attrition rate in drug development. This high attrition rate leaves

little margin for dramatic improvement of overall productivity. At the moment the

pharmaceutical industry is trying to generate some leverage by working on

improving the development process for the 10% developmental drugs which

make it through the pipeline. Business process engineering as such is less riskier

than rethinking the overall discovery and preclinical development process. Business

process improvement can be modeled on what was done in the automotive and aerospace industry

when those sectors faced hard times. Due to the success of its blockbusters in the 1990s, the

pharmaceutical industry only recently faced the same challenges.

I will focus on the content

of the drug discovery and development process. How can we scrutinize the

R&D projects earlier in the preclinical development process to help minimize

the risks involved in clinical development of new drugs (now down to 10% success rates)?

We need a better process content in relation to clinical reality, not only more content

as such.

Drug discovery and development:

an inefficient process

At the end of the drug

discovery and development pipeline, there are patients waiting for treatments,

company presidents and shareholders waiting for profit and governments trying

to balance their health care budget. For pharmaceutical and biotech companies,

the critical issue is to select new molecular entities (NME) for clinical

development that have a high success rate of moving through development to drug

approval. Finding new drugs (which can be patented to protect the enormous

investments involved) and at the same time reducing unwanted side effects is

vital for the industry. We must try to understand the reasons for failure in

clinical development in order to improve drug discovery and

preclinical development.

Figure 5. Evolution of Total Sales and R&D Spending Source: Pharmaceutical Research and Manufacturers of America (PhRMA) Pharmaceutical Industry Profile 2004 (Washington, DC: PhRMA, 2004) |

Figure 6. Evolution of Total Sales and percentage of R&D Spending Source: Pharmaceutical Research and Manufacturers of America (PhRMA) Pharmaceutical Industry Profile 2004 (Washington, DC: PhRMA, 2004) |

Figure 7. Evolution of Research and Development Spending Domestic and Abroad Source: Pharmaceutical Research and Manufacturers of America (PhRMA) Pharmaceutical Industry Profile 2004 (Washington, DC: PhRMA, 2004) |

Figure 8. Evolution of Research and Development Spending and NDAs submitted Source: Pharmaceutical Research and Manufacturers of America (PhRMA) Pharmaceutical Industry Profile 2004 (Washington, DC: PhRMA, 2004) FDA CDER NDAs received per year |

The demand for innovative medical treatments is constantly growing as people in the

wealthy developed world live longer with a concomitant increase in the burden

of chronic diseases. At the same time, patient expectations about the quality

of treatment and care they receive are rising and unmet medical needs

remain high. There still are significant pharmaceutical gaps, that is, those diseases

of public health importance for which pharmaceutical treatments either

do not exist or are inadequate. What can modern society expect from its pharmaceutical industry to

deal with the challenges arising? Let us take a look at the evolution in income

of the US pharmaceutical industry and output over the last 30 years, from 1970 to 2003.

The total sales of the US pharmaceutical industry has risen almost exponentially

over the past 30 years (Figure 5). About 16% of sales income is spent

on R&D (Figure 6), which makes R&D, after marketing costs,

the second biggest item in the spending profile of large pharmaceutical companies.

The percentage of sale income spent on R&D has risen

from 9.3% in 1970 to 15.6% on 2003, a rise of 6.3%.

The total amount of money spent on R&D has risen

enormously since 1970, mostly in the US (Figure 7). In 2003, almost half of all R&D

spending worldwide was made in the USA.

However despite this almost exponential rise in total R&D

spending, the number of NDAs approved by the FDA has not risen significantly (Figure 8).

The money invested in R&D has not lead to an equal rise in output of the R&D process.

Due to the increasing mismatch between rising R&D expenditure and decreasing R&D

efficiency (Figure 8) the overall profit margins of the pharmaceutical industry

are decreasing (Figure 3).

Whatever the phrmaceutical industry spends on R&D, it has a significant overhead of additional manpower to sustain.

In 2000 the US pharmaceutical industry directly employed 247,000 people (down form 264,400 in 1993), with 51,588 of them

working in R&D, which means only 21% of its workforce is directly involved in the drug

discovery and development process (Kermani F., 2000; PhRMA 2002). In 2000 the European pharmaceutical industry

employed 560,000 people of which only 88,200 worked in R&D, which is 16%.

(EU source: The European Federation of Pharmaceutical Industries and Associations (EFPIA)

and The Institute for Employment Studies (UK)).

In 1990 the European pharmaceutical industry directly employed 500,762 people (76,287 in R&D or 15,2%). It took

10 years to increase total employment to 540,106 people (of which 87,834 in R&D or 16,3%, conflicting data),

but then it took only 3 years to increase total employment up to 586,748 (of which 99,337 in R&D or 16,9%).

The industry is not capable to reduce its overhead and to significantly increase its new drug generating workforce in

relation to its total employment. From 1990 to 2003 the European pharmaceutical industry icreased its workforce with 85,986

, but added only 23,050 for R&D.

The expenditures in R&D grow faster than its R&D workforce which indicates that

money is being spent mainly on equipment (e.g. for HTS), but which fails to sustain the growth of productivity in the

end (Figure 8). Ubiquity does not equal overall process efficiency and effectiveness.

Two elements which are often overlooked in the discussions about the increasing cost and duration

of R&D: tax returns and the

US Public Law 98-417

(the Hatch-Waxman Act) which was enacted in 1984.

Pharmaceutical companies in general spend a certain amount of the revenues on R&D because of its impact on

tax returns, so the cost is not the only driver. When sales increase tax deductions are important incentives to spend part

of the revenue on investments in R&D (Figure 6). But in the end, the new investments have to support

further growth, which is not always the case.

The "Drug Price Competition and Patent Term Restoration Act" (1984) was intended to balance two important public policy goals.

First, drug manufacturers need meaningful market protection incentives to encourage the

development of valuable new drugs. Second, once the statutory patent protection and

marketing exclusivity for these new drugs has expired, the public benefits from the

rapid availability of lower priced generic versions of the innovator drug

(Abbreviated New Drug Applications or ANDA).

One aspect of the "Drug Price Competition and Patent Term Restoration Act", the

"Patent Term Restoration"

refers to the 17 years of legal protection given a firm for each drug patent.

Some of that time allowance is used while the drug goes through the approval

process, so this law allows restoration of up to five years of lost patent time.

Under the

Hatch-Waxman Amendments,

patent protection can be extended (under certain conditions)

for up to 28 years, about 11 years of extra protection compared to the 17 years originally granted by US law.

The regulations governing the Patent Term Restoration program are located in the

Code of Federal Regulations (CFR),

Title 21 CFR Part 60.

The Uruguay Rounds Agreements Act (Public Law 103-465), which became effective on June 8, 1995,

changed the patent term in the United States. Before June 8, 1995, patents typically had 17

years of patent life from the date the patent was issued. Patents granted after the

June 8, 1995 date now have a 20-year patent life from the date of the first filing of

the patent application. Although pharmaceutical companies suffer from longer development cycles,

tax incentives and extended patent protection lessen the impact on their business results. The patients

are the true losers of the game, because they have to wait longer for new drugs for unmet medical needs.

Instead of creating a long-winded and inefficient process, medicine would be served better with a shorter and

more productive process.

Figure 9. Evolution of R&D spending allocation Source: Pharmaceutical Research and Manufacturers of America (PhRMA) and Source: USA NSF Division of Science Resources Statistics (SRS) |

Although overall R&D spending has increased over the years, there has been a remarkable shift in the

allocation of R&D spending. Clinical development spending has increased significantly

(Figure 9), while spending on applied research (i.e. preclinical) has decreased.

Basic research spending shows an increase in recent years. The overall picture is an increased

spending on clinical development, while there is less spending on the processes feeding clinical

development with appropriate development candidates. Mainly the investments

in applied research, which is the bridge between basic research and clinical development shows

signs of neglect. The Early Development Candidates (EDC) were expected to require less preclinical

validation than before?

The cost to develop a single drug which reaches the market has increased tremendously in recent years

and only 3 out of 10 drugs which reached the market in the nineties generated

enough profit to pay for the investment (DiMasi, J., 1994; Grabowski H, 2002; DiMasi JA, 2003). This is mainly due to the low efficiency and

high failure rate of the drug discovery and development process.

Pharmaceutical companies are always trying

hard to reduce this failure rate. Indirect losses in drug development caused by

a failure in drug discovery are among the most difficult to quantify but also

among the most compelling in the riskmitigation category.

Pharmaceutical companies want to find ways to bring down the enormous costs

involved in drug discovery and development (Dickson M, 2004; Rawlins MD.,

2004).

Only about 1 out of 5,000

to 10,000 drugs makes it from early pre-clinical research to the market, which

is not an example of a highly efficient process. The focus of the

pharmaceutical industry on blockbuster drugs is a consequence of the mismatch

between the soaring costs and the profits required to keep the drug discovery

and development process going. The blockbuster model now delivers just 5%

return on investment and only one in six new drug prospects will deliver

returns above their cost of capital. The "nichebuster" is now an

emerging model for the post-blockbuster era.

Only diseases with patient

populations large enough (and wealthy enough) to pay back the costs for a full

blown drug development are now worth while working on. Research for new

antibacterial drugs is being abandoned, due to an insufficient return on

investment (R.O.I.) to pay for the development costs of new drugs (Lewis L,

1993; Projan SJ., 2003; Shlaes DM., 2003). If the industry cannot bring the

costs down, it may as well try to raise its income by changing its price

policy, but this shifts the solution for the problem from in- to outside the

company and places the burden on the national health care systems.

Companies which were more

successful in the past achieved a higher efficiency even without the

availability of extensive genomic and proteomic data and new low-level disease

models. The founder of Janssen Pharmaceutica,

Paul Janssen, PhD, MD (1926-2003),

in his early days achieved a ratio of 1 drug for every 3,000 molecules screened. Over the years he and his

teams developed about 80 drugs (out of 80,000 molecules, so 1 drug for 1,000 molecules screened)

of which 5 (6.3%) made it to the

WHO Model List of Essential Medicines.

He worked in fields as diverse as gastroenterology, psychiatry, neurology, mycology and

parasitology, anaesthesia and allergy. As a scientist he has been one of the most highly productive

and widely esteemed pharmacological researchers in the world for more than 45 years.

He had a deep understanding of both drug discovery and drug development. Dr. Paul Janssen

had always been the personification of a unique combination: on the one hand the

brilliant scientist, and on the other the very successful manager. Let us take a look at his approach to

active strategic management which

requires active information gathering and active problem solving. Dr. Paul Janssen practiced

Management By Walking Around (MBWA), which gave him access to all the research going on and

allowed him to orchestrate the efforts of his scientists, from discovery up to Phase III,

like a conductor and thereby avoiding silo development.

A deep understanding of a wide range of issues is required to bring a drug from early drug

discovery to the market. Introducing new technology and generating more data alone are not

sufficient to improve the drug discovery and development process (Drews J. 1999; Horrobin DF,

2003; Kubinyi H., 2003; Omta S.W.F., 1995). We need better content and understanding, not just

more targets and data to be fed into the preclinical and clinical development process. As such

the present-day discovery process, suffers from molecular

myopia as it lacks the big picture

understanding of disease mechanisms in man. In contrast the more traditional physiology based process, suffered from

system-wide

presbyopia as it lacked molecular resolution. The ideal approach would

be the combination of both, which has the potential to improve both the quantity as well as the quality of the process.

Quantity without a match in content quality (clinical relevance) leads to failure later on in the

drug development pipeline. We have to look at drug discovery

and preclinical development with clinical drug development and the patient in mind. Look back from

clinical reality into the drug discovery and development process and analyse its failures. A process which in the end

fails to prove its value in man should be changed.

The drug discovery and development process

Let us now take a closer look at the evolution of the output of the drug discovery and development over the years. How does the productivity of the process evolves? What is the cost/benefit ratio of the investments made and the overall outcome for drug discovery and development.

Figure 10. NDAs submitted over the years. Source: FDA CDER NDAs received and NMEs approved. |

Figure 11. Evolution of INDs and NDAs over the years. NDAs left axis, INDs right axis. Source: FDA. |

The number of NDAs submitted does not show a significant increase in recent years (Figure 10),

compared to almost 20 and 30 years ago in the days of physiology based drug discovery. The number of

approved New Molecular Entities (NME) shows a sharp decrease in the early sixties, due to the more stringent

regulations for drug safety testing because of the

Thalidomide scandal. About 66% of the NMEs did not make it

anymore when better testing was required by the FDA and the pre-Thalidomide productivity was never reached again.

The number of NMEs was at its lowest at the end of

the sixties (9 in 1969) and has slowly increased since the early seventies (Figure 10). NMEs

are about 25% of all NDAs, before halfway the eighties it was on average less than 20%.

The number of NDAs is not the only

indicator of success for the pharmaceutical industry. Blockbusters generate higher sales per product, so

both the number of NDAs as well as the sales per marketed drug are important indicators.

Depending on a blockbuster makes a company vulnerable to problems (SAE) with a single drug and

patent expiry of a blockbuster has a bigger impact.

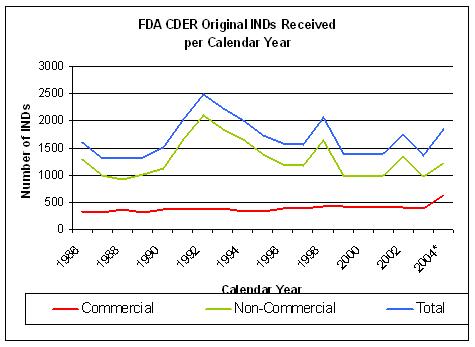

In figure 7 we can see that the number of INDs and NDAs submitted over the years, does not show a significant improvement.

The larger than average number of approvals in 1996 reflects the implementation of the

Prescription Drug User Fee Act (PDUFA).

The number of INDs does not show a significant increase over the years, so the overall productivity of drug

discovery and development has not improved, despite the high investments in Research and Development (Figure 7 and

Figure 11). The number of active INDs shows an overall increase, but this only means that

the drug development pipelines are filling up because the clinical trials take longer. The in- and

outflow of drug development (INDs and NDAs) has not changed in a way to explain the increase in active INDs.

The pharmaceutical industry itself expects that products will stay in phases longer than has historically

been the case, lowering the probability of a product moving from one phase to another in a particular year.

We have not seen a proportional increase in NDA submissions to the FDA, compared to the number of active INDs

(Figure 10 and 11).

The main reasons for declining productivity of drug development are:

- Tackling diseases with complex etiologies, which are not well understood.

- Demands for safety, tolerability are much higher than before.

- The proliferation of targets is diluting focus.

- Genomics has been slow to influence day-to-day drug discovery.

- A negative impact of mergers on R&D performance?

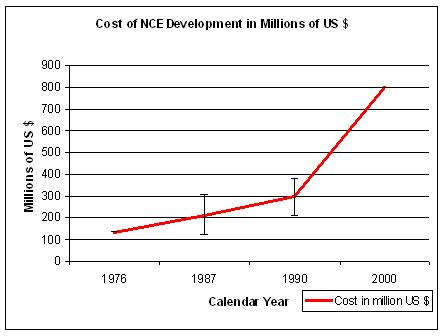

Figure 12. Sources: for 1976 Hansen, 1979; for 1987a Wiggins, 1987; for 1987b Woltman, 1987c, for 1987c DiMasi, 1991; for 1990a and b OTA pre-tax, for 2000 DiMasi, 2003. Differences are also due to out-of-pocket versus capitalized costs. |

Figure 13. Source: FDA CDER INDs received per year |

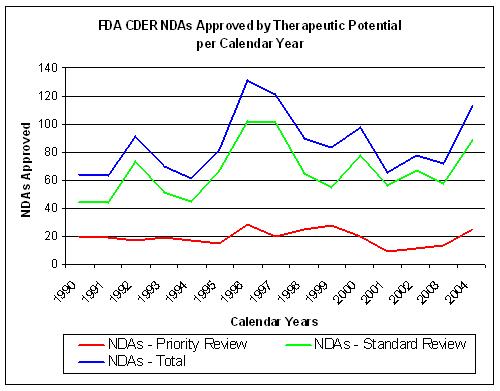

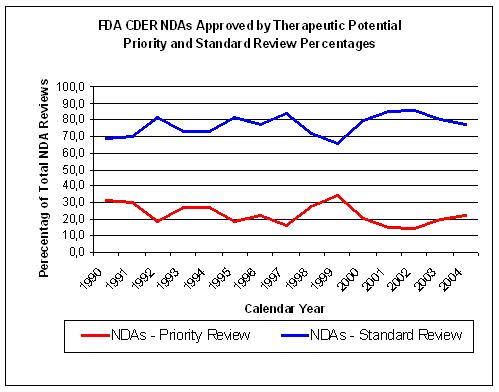

Figure 14. Source: FDA CDER NDAs approved per year |

Figure 15. Source: FDA CDER NDAs approved per year |

Let us now take a closer look at the drug discovery and development process

(clinical trials).

Although different sources give different outcomes, the trend is one of increasing costs and reduced Return On Investment (R.O.I.).

In 2000 it took about US$ 500 to US$ 802 million to develop a new drug and bring it to the market (DiMasi J.A., 2003),

which is a significant rise since 1976 when it cost about US$ 137 million (all numbers in year 2000 US$) (Figure 12).

These estimates include opportunity costs, which are lost profits that could have been realized if the money tied

up in an enterprise had been invested elsewhere (DiMasi J.A., 2003). Almost half of the

DiMasi (Tufts) US$ 802-million

figure - $399 million - is comprised of this "cost of capital", leaving a figure of US$ 403 million for

direct out-of-pocket expenses, most of which is expended in clinical trials. Whether you favor US$ 403

million or US$ 802 million, the cost of drug discovery and development is far too high.

When we look into the diferent stages of drug discovery and development,

the US$ 802M costs are divided over: Discovery and preclinical testing US$ 335M, Phase I: US$ 141.7M,

Phase II: US$ 137.2M and Phase III: US$ 174M. The total cost for clinical development is

US$ 452.9M. The cost for FDA Review/Approval: US$ 13.8M.

The basic numbers for time

spent and costs made in drug discovery and development can be found in several

documents published by institutes which generate reports about the

pharmaceutical industry (Boston Consulting Group,

Tufts Center for the Study of Drug Development,

Pharmaceutical Research and Manufacturers of

America (PhRMA), the Institute for Regulatory Science (RSI),

CMR International, etc.).

IMS is a source for pharmaceutical market information. The

Association of Clinical Research Professionals (ACRP)

and the Center for Information and Study on Clinical Research

Participation (CISCRP) provide information on clinical trials.

To be complete, there are

alternative views

which criticize the calculation of the cost of drug discovery and development.

Here are the

Public Citizen and

TB Alliance reports.

A discussion of these reports and the Tufts study can be found

here.

Although an open and critical discussion is the only way to understand complex

issues such as research and development costs, the discussion sometimes loses its focus and becomes tainted by

sophisms

to support political and personal agendas. I leave it to the critical reader to decide.

The consequence of accepting the alternative views would be that the

pharmaceutical industry would be losing money due to costs outside its core

mission, which is even worse, because research and development can be improved,

but this would not help in this case. The result is in each case, that drugs

are only worth while to develop, if they have an enormous market potential

(large numbers of wealthy patients),

require as little as possible investments (me-too drugs and generics)

and have the shortest development cycle possible (less complex diseases).

Otherwise they do not earn back the money invested, when finally they

reach the market. This leads to an increasing focus on typical Western "diseases"

such as obesity or hypercholesterolemia, due to overintake of food and

unhealthy living. Tropical diseases, if they do not make it to the wealthy

world, are to be avoided. You cannot blame the pharmaceutical industry,

because if they do not live up to the expectations of their shareholders, they

are punished by a decreasing stock-value (see Figure 1).

The number of INDs coming out of drug discovery does not show a significant improvement since 1992

although overall costs have risen sharply.(Figure 13 and 14).

The non-inovative drugs get a standard review by the FDA instead of a priority review and

constitute about 75% of all NDA submissions (Figure 15).

About 10-20 % of the total costs are due to the drug discovery process, the rest is

caused by drug development, production and marketing costs. Clinical development

costs, on average US$ 467 million, which makes up more than half the total cost.

The cost of a Phase I clinical trial is about US$ 15.2, for Phase II it costs about US$ 16.7 and

Phase III US$ 27.1 (in 2000 US$, DiMasi J.A., 2003). The cost of a Phase III clinical

trial ranges between US$ 4 million and US$ 20 million and you need at least two of

them (Kittredge C, 2005).

Study delays, such as slow patient recruitment, protocol amendments and review

processes, are contributing factors. Every day that a drug is prevented from being

on the market means a loss of sales, which in the case of blockbuster drugs can be

as much as US$ 45 million per day.

From about 8 years in the

1960s it now takes an average pharmaceutical company about 10 to 15 years to

bring one new drug to the market. Of these 15 years about 6.5 years or 43%

of the total time is spent in pre-clinical research.

Development starts with candidate/target selection or the selection of a promising

compound for development. Pre-clinical and

non-clinical research involves necessary animal and bench testing before

administration to humans plus start of tests which run concurrently with

exposure to humans (e.g. two-year rodent carcinogenicity tests).

About 7 years or 46 % of the total time is time spent in clinical research (1.5 years

in Phase I, 2 years in Phase II and 3 years in Phase III). Phase I (First Time In Man, FTIM) of a clinical trial

deals with drug safety and blood levels in healthy volunteers (pharmacology). Phase II (Proof of concept, PoC) deals

with basic efficacy of a new drug, which proves that it has a therapeutic value

in man (exploratory therapeutic). Finally Phase III deals with the efficacy of the drug in large patient

populations (confirmatory therapeutic). It is easy to understand that the increase of the population used

to study the effect has a dramatic impact on the complexity and the cost of the

clinical trial.

To process a New Drug

Application (NDA) takes the U.S. Food and Drug

Administration (FDA) on average 1.5 years based on the results and

documents provided by the pharmaceutical industry. The situation in

In the 1990s about 38 % of the drugs which

came out of discovery research dropped out in phase I. Of those molecules which

made it out of phase I, 60 % of those failed in phase II clinical

studies. And now we get to the really expensive phase III in which 40 %

of the remaining candidates failed. Of those drugs which made it out of phase

III to the FDA 23 % of the ones that made it through the clinical trials

failed to be approved by the FDA. All this translates to about 11 %

overall success rates from starting the clinical trials (

Figure 16. Less than 10% of INDs make it to an NDA. Source: FDA CDER NDAs approved per year and FDA CDER INDs received per year |

Figure 17. Overall success of clinical development decreased from 18% to 9%, worst decline in Phase II (effectiveness), from 46% to 28% Source: Loew C.J., PhRMA, HHS Public Meeting, November 8, 2004 |

Figure 18. Evolution of attrition from 1995 to 2004. Source: Pharmaceutical Research and Manufacturers of America (PhRMA) |

Figure 19. Trends in probability of success from 'first human dose' to market by therapeutic area. Source: Pharmaceutical Research and Manufacturers of America (PhRMA) |

As a rough indication of overall inefficiency we

can compare FDA NDA and IND data five years different. If we take on average 5 years from IND (IMP in Europe)

after 5 years of IND filing, less than 10% of INDs make

it to an NDA (Figure 16). The evolution of NDA approvals also shows a decline over the years.

In recent years overall success rates for clinical development decreased from 18% to 9% (Figure 17).

This is mainly due to an almost 40% reduction of success in phase II clinical trials, which means a failure in

exploratory treatment or clinical activity. A Phase II clinical trial is intended to determine activity, it does not yet

determine efficacy, which is the goal of a Phase III clinical trial. Thus the outcome of Phase II is a decisive

point in a drug's development. If we look at the evolution of attrition rates from 1995 to 2004, we see an

overall increase in development candidates in preclinical development and an increase in Phase I and II development

(Figure 18). There is no significant increase in Phase III clinical trials, as most developmental

drugs increasingly fail in Phase II. The drugs show an activity in drug discovery and preclinical development,

but no significant activity in a clinical situation on a real-life disease process. The increase in attrition

is not the same for every therapeutic area (Figure 19). For alimentary and metabolic diseases

the probability of success (POS) is even increasing and is about then times as high as for the nervous system (1999).

The high success rate of anti-infectives is also caused by the fact that we are capable to make a valid representation of

the entire target system (bacteria) in a "test-tube" or "petri-dish" early on in the process,

and not only a billion-fold reduced representation of the human biosystem. As long as the dominant view on

applied science remains that a set of molecules in a "test-tube" can represent the complexity of

system (reductionism) our models will disappoint us at the end of the process when there is no

escape from the complexity and variability of man and human population. Leaving too much of the original

system out of an experiment brings too much flaws into the experiment. Elimination without confirmation

of validity against the original condition gives flawed results (and late stage attrition).

The significance of increasing Phase II failures is a new evolution, as in the

1980s and early 1990s the failure rates remained relatively steady. The failure rate of new clinical

entities (NCEs) remained relatively steady through the 1980s and early 1990s (DiMasi J.A., 2001).

Among NCEs for which an investigational new drug (IND) application was filed in 19811983,

approval success rates were 23.2%; 19841986, 20.5%; 19871989, 22.2%; and

19901992, 17.2%. This includes both self-originated and acquired NCEs. According to the FDA

historically 14% of drugs that entered Phase I clinical trials eventually won approval, now 8% of

these drugs make it to the marketplace, and that half of products fail in the late stage

of Phase III trials, compared to one in five in the past

(Crawford L.M., 2004).

"...In the past, we used to see a 20 % product failure in the late stages of the Phase 3 trials.

Currently, the failure ratio at this stage is 50 %. The reason for this unpredictability,

in our analysis, is the growing disconnect between the dramatically advancing basic sciences

that accelerate the drug discovery process, and the lagging applied sciences that guide the

drug development along the critical path. ..."

(Crawford L.M., 2004). Overall

late stage attrition is on the rise, but how should preclinical development and Phase I clinical trials

predict success or failure in Phase II or III, when they are not conceived or designed to do this?

Each stage from discovery over preclinical development to clinical development is meant to provide

an answer for a particular question, not for the question arising in the next stage of the discovery

and development pipeline. Which elements or markers in preclinical development would allow us to

predict events in clinical development. In order to achieve this we need a better understanding of the critical

issues in the clinical disease process. The analysis of failures in Phase II should at least help us

to understand the mechanism of these failures in order to feed those lessons back into preclinical

development. The transition from preclinical development to Phase I and Phase I itself deals with finding a

appropriate dosing scheme to start with (e.g. MTD Maximum Tolerated Dose), but not yet with clinical

activity, which comes into play at Phase II.

There are some practical considerations to determine the clinical activity of a developmental drug,

one of which is the sample size. The study design (case/control, cohort study, RCT, etc.) is the

first decision, but sample size is a close second.

An important issue is the power of the trial. Once the level of activity that is of interest has been decided

on, one should design a trial that exposes the fewest possible patients to inactive therapy,

e.g. by appplying the method of Gehan and Schneiderman (Gehan E.A., 1990). In general you need more patients

when you want to find out about a smaller therapeutic effect. This is an important cause of the

overall increase in patient numbers required, depending on what you want to prove. When we cannot achieve a

dramatic therapeutic breakthrough with a diseases, a small improvment is what we want to prove. Instead of a

revolutionary breakthrough, quite often therapeutic improvements are only incremental. Let me clarify this with an example.

When Louis Pasteur (1822 1895)

developed a vaccine against rabies, the shortterm outcome was clear, either you died or you survived. Rabies is

a viral disease with about 100% mortality, i.e. you almost always die when you get the disease.

So the therapeutic effect was very simple to assess, which also made complicated analysis of the therapeutic

results less necessary. There was also less consideration about possible side effects, as dying from rabies was

a horrible disease process.

Let us now take a look at Alzheimer's Disease (AD) (named after

Alois Alzheimer), a debilitating degenerative disease

of which the pathological process is still not well understood. We cannot achieve a "restitutio in integrum"

(restoration to original condition) and regrow the brain cells which are lost due to the disease process.

So, now we can decide to wait until we know all about the process and then start developing a cure. This would mean that

in the mean time we do nothing to help whatsoever. As you can understand, this is not a valid option.

In the mean time, therapy is aimed at slowing down the process of mental deterioration. This however is a

more subtle outcome than the short-term live or die outcome in the case of rabies. These less than 100% success

rates make it harder to prove the success of a new therapy. The need to prove a small improvement, makes clinical trials

more complex and much larger.

Figure 20. Sample size (N) for comparing two means. In addition to α and β, N only depends on Δ/σ, or the effect size. α = 0.05 and 1 - β = power = 90% for a 2-sided test. The graph shows N as a function of Δ/σ = difference in units of s.d. |

Figure 21. Sample size (N) for comparing proportions (p). In addition to α and β, N depends on p and Δ. Let α=0.05, 1- β = power = 90%, 2-sided testing, p=0.5 (conservative estimate for variance). The graph shows N as a function of Δ = difference p1-p2, e.g. 0.2 = 0.6 - 0.4 |

Designing a clinical trial is not a trivial endeavour as the days

of Louis Pasteur are gone and the environment in which to develop new therapies has changed dramaticaly. A clinical trial

requires careful design in order to be able to answer the research question (hypothesis) with some confidence in the answer.

You want to prove that a new therapy works in a reliable way. Traditionally, H0 is the hypothesis that includes equality or the

expectation that nothing will happen and the alternative hypothesis H1 that something significant will happen (Rosner B, 1995).

A p-value is a measure of how much evidence we have against the null hypotheses. The significance test yields a p-value that

gives the likelihood of the study effect, given that the null hypothesis is true. A small p-value provides evidence against

the null hypothesis, because data have been observed that would be unlikely if the null hypothesis were correct.

Thus we reject the null hypothesis when the p-value is sufficiently small. However life in clinical trilas is not that simple.

There are two type of statistical errors you can make in a trial. A Type I error occurs

when you reject H0 when H0 is true, i.e., you declare a significant difference when the result happened by chance

(false positive - a drug will be used while it is not effective). A Type II error occurs when you accept H0 when H1 is true, i.e.,

you say there is no significant difference when there really is a difference (false negative - a drug will not be used while it has an effect).

How do we deal with these issues? While we cant prevent the possibility of incorrect decisions, we can try to

minimize their probabilities. We will refer to alpha (α) and beta (β) as the probabilities of Type I and

Type II errors, respectively.

or

An interesting element of a trial is the power of the trial. A study can have too little power to find a meaningful difference,

when the sample size is too small. No significant difference is found and the treatment or method is discarded when

it may in fact be useful. The alternative Hypothesis (H1 or Ha) is that there will be a significant (therapeutic) effect.

The P(Type II error) = β and β depends on how large the effect really is. The power (P) of a test is the probability that we

reject the null hypothesis given a particular alternative hypothesis is true and Power = 1 - β. Summarized:

β = Probability(missing the difference) and Power = Probability(detecting the difference).

All this comes down to the overall rule that in order to prove a small decrease in disease progression we need

a relatively large number of a patients. It is because of this kind of effect, the size

of patients in clinical trials has risen dramaticaly in recent years. Also in the case of rabies, there was no

effective treatment to compare with, so the comparison was straightforward and simple. In Figure 20 and

Figure 21 you can see for two different types of trials, the effect of sample size required to detect an increasing

difference. This is an important reason for having patient populations of up to 5,000 patients in Phase III clinical trials.

If we could make a big difference with a treatment, then we would not need such large numbers of patients to prove our case.

With a chronic degenerative disease, reducing the speed of progress of the disease with only 0.1%, could

mean that in 20 years thousands of people would benefit (longevity in the Western world), but the problem is that you must

prove this small difference within the scope of a clinical trial. This is one of the most important reasons for clinical trials

to become increasingly global in nature and more complex in protocol design.

The difference with the 19th century is also that we now have to compare with drugs which are already

on the market and have a proven therapeutic effect. The pharmaceutical industry is increasingly challenging itself to improve

against its own therapeutic success of the past. As such the pharmaceutical industry itself is the biggest problem for the

pharmaceutical industry. There is a lot more to be told on on clinical trial design, but this

is not within the scope of this article. The main issue is that in modern clinical development, the situation is more

complex to evaluate than before.

In inductive research, applying statistics has to be done with care. Expanding a trial population beyond

the boundaries of statistical relevance, may lead to spurious statistical significance but will not improve

the correlation to clinical relevance. By doing this we increasingly feed the process with false positives

and increase the pressure towards the end of the pipeline. The basic principles of probability (significance)

and induction (relevance) should be taken into account when designing and performing experiments.

Figure 22. Despite a reduction in attrition due to pharmacokinetics issues, efficacy has not improved, since 1991. In 1991 40% of PK failures were caused by poorly bioavailable anti-infectives, when we remove these from the equation, then only 7% of failures in 1991 were caused by poor ADME. Source: Pharmaceutical industry attrition profiles, evolution (Kennedy, T., 1997; Prentis RA, 1988). |

Figure 23. The major cause for failure, efficacy, only becomes apparent late in development. Source: KMR Group 1998 - 2000 |

What about the evolution of the basic reasons for attrition in drug development? Attrition due to a lack of efficacy

of drugs in development has not improved since 1991 (Kennedy, T., 1997; Prentis RA, 1988) (Figure 22).

Attrition rates due to poor pharmacokinetical profiles (PK) have dropped significantly, due to better preclinical

in-vitro and in-vivo models. However about 40% of failures in clinical development were due to inappropriate

pharmacokinetics of poorly bioavailable anti-infectives, if those were removed from the equation

then ADME was only responsible for 7% of failures in 1991 (Kennedy, T., 1997).

The basic numbers on attrition causes explain why attrition rates in Phase I clinical trials have declined less

than those in Phase II. Drugs with unfavorable PK profiles are now increasingly stopped before they reach

clinical development, so the ineffective ones now make it into Phase II in relatively larger numbers.

The clinical development attrition trends also show an unfavorable evolution since 1991 (Figure 22).

The disease models used in drug discovery and preclinical development fail to predict

failure in clinical development in about 80 to 90% of the drugs which enter clinical development.

And the combined predictive power of all clinical trials (Phase I to III) fails to predict failure in 1 out of

four or 25% or even 50% of all drugs submitted to the FDA for approval.

The major cause of attrition, efficacy, also shows up late in development, as preclinical development and

Phase I are unable to detect this failure. Preclinical development lacks the proper predictive models

and Phase I is not designed to detect a failure in efficacy. Clinical safety issues increase with

the number of people taking the drug, after it is on the market (Figure 23).

What can we learn out this

numbers and what is being done in drug discovery? The role of

absorption,

distribution, metabolism, excretion (ADME) and toxicity (ADMET) is an important part